BriMor Labs

Welcome to the BriMor Labs blog. BriMor Labs is located near Baltimore, Maryland. We specialize in offering Digital Forensics, Incident Response, and Training solutions to our clients. Now with 1000% more blockchain!

Thursday, September 5, 2019

Small Cedarpelta Update

Good morning readers and welcome back! This is going to be a very short blog post to inform everyone that a very minor update to the Cedarpelta version of the Live Response Collection has been published. An anonymous commenter pointed out that when a user chose one of the three "Secure" options, the script(s) failed due to an update to the SDelete tool. I changed the module to ensure that it works properly with the new version of the executable and published the update earlier this morning. As always, if you have any feedback or would like to see additional data collected by the LRC, please let me know!

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019
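If you would like to confirm that your download matches the published hashes, here is a minimal Python sketch. The function names (`file_digests`, `matches_published`) are my own illustrative choices; only the two hash values come from this post.

```python
import hashlib

# Published values copied from this post.
EXPECTED_MD5 = "7bc32091c1e7d773162fbdc9455f6432"
EXPECTED_SHA256 = "2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63"


def file_digests(path, chunk_size=1024 * 1024):
    """Return (md5_hex, sha256_hex) for a file, reading it in chunks."""
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in fixed-size chunks so large archives do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return md5.hexdigest(), sha256.hexdigest()


def matches_published(path):
    """True only if both digests match the values published above."""
    md5_hex, sha256_hex = file_digests(path)
    return md5_hex == EXPECTED_MD5 and sha256_hex == EXPECTED_SHA256
```

Calling `matches_published("LiveResponseCollection-Cedarpelta.zip")` on the downloaded archive should return `True` if the file is intact.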

Thursday, June 20, 2019

Phinally Using Photoshop to Phacilitate Phorensic Analysis

Hello again readers, and welcome back! Today's blog post is going to cover the process that I personally use to rearrange and correlate RDP Bitmap Cache data in Photoshop. Yes, I am aware that some of you know me primarily for my Photoshop productions in presentations and logos (and HDR photography, a hobby I do not spend nearly enough time on!), but the time has finally come when I can utilize Photoshop as part of my forensic analysis process!

First off, if you are not aware, when a user establishes an RDP (Remote Desktop Protocol) connection, there are files that are typically saved on the user’s system (the source host). These files have changed in name and in format over the years, but commonly are stored under the path “%USERPROFILE%\AppData\Local\Microsoft\Terminal Server Client\Cache\”. You will usually have a file with a .bmc extension, and on Windows 7 and newer systems, you will also likely see files that are named “cache0000.bin” (these are numbered incrementally, starting at 0000). This format was introduced with Windows 7, and the files should be searchable by the naming convention of “cache{4-digits}.bin”. Both file types contain what are essentially small chunks of screenshots that are saved of the remote desktop. The most reliable tool that I have found to parse this data is bmc-tools, which can be downloaded from https://github.com/ANSSI-FR/bmc-tools. The process for extracting the data is straightforward: you point the script at a cache####.bin file and extract it to a folder of your choice. Once done, you end up with a folder filled with small bitmap images.
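If you are working from a mounted image or an exported user profile, a quick way to locate candidate cache files is to search on the naming convention described above. This is only a rough sketch: the function name `find_rdp_cache_files` is hypothetical, and the case-insensitive match is my own assumption to be safe across systems.

```python
import re
from pathlib import Path

# "cache" followed by exactly four digits and ".bin", per the naming convention above.
CACHE_BIN = re.compile(r"^cache\d{4}\.bin$", re.IGNORECASE)


def find_rdp_cache_files(root):
    """Recursively list files that look like RDP bitmap cache files under root."""
    hits = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        name = path.name
        # Keep the newer cache####.bin files as well as the older .bmc files.
        if CACHE_BIN.match(name) or name.lower().endswith(".bmc"):
            hits.append(path)
    return sorted(hits)
```

The returned paths can then be handed to bmc-tools one at a time for extraction.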

Now begins the phun part! The bitmaps will need to be rearranged manually to reconstruct the screenshot as best as is possible (like a jigsaw for forensic enthusiasts). This is not an exact science, and it relies on educated best-guess in many cases. While this could be a more manual and tedious process, Adobe Photoshop can be used to automate the import of the files. Then you can rebuild the item(s) as you see fit!

First, view the contents of the folder in Windows Explorer, or Adobe Bridge (included in Adobe Photoshop CC bundle) for Mac users. I found that Preview does not work, as it does not render the bitmaps properly. Rather than spending valuable time trying to figure out why that is, I just used Bridge.

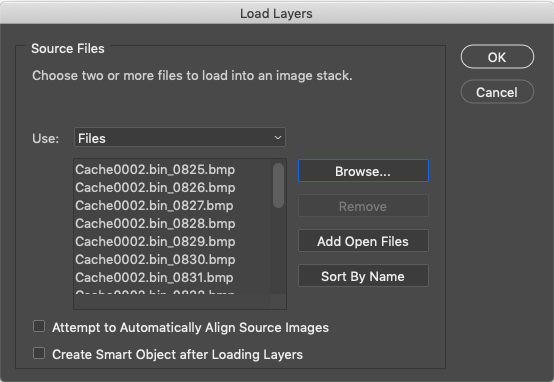

Next select the bitmaps of the activity you’d like to reconstruct, go into Photoshop, and choose "File-Scripts-Load Files into Stack...":

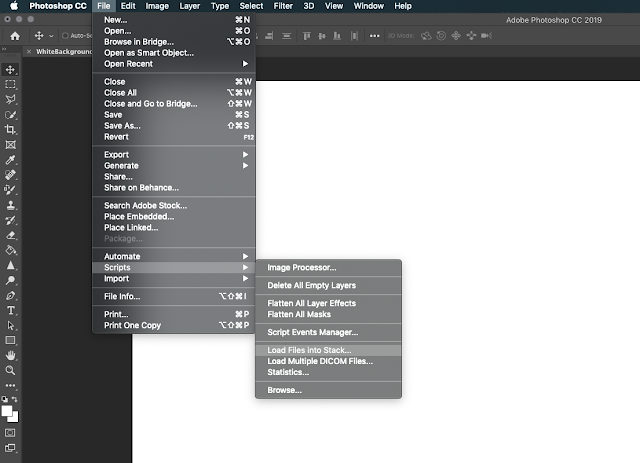

This will allow you to choose multiple files to import into Photoshop all at once. You will be presented with a “Load Layers” option. Select the “Browse” button, and then browse to the folder that contains the bitmap files you wish to load:

| The "Load Layers" dialogue box. In order to choose the file(s) you want to open, click "Browse..." |

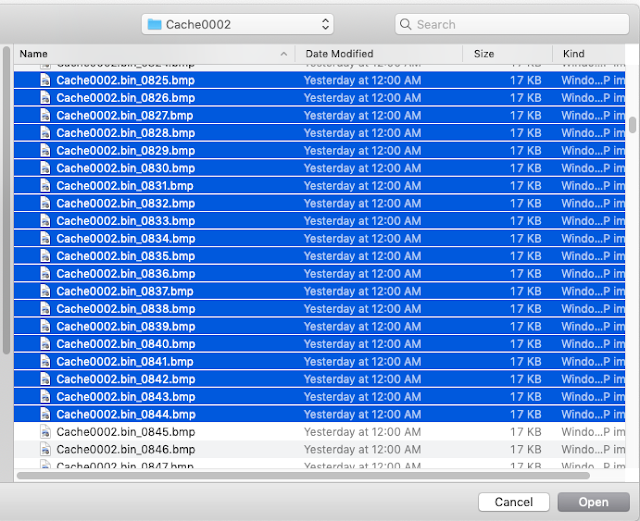

| Choose the files that you wish to load |

Once you’ve selected the bitmap files, you will see the “Load Layers” box is populated with those files:

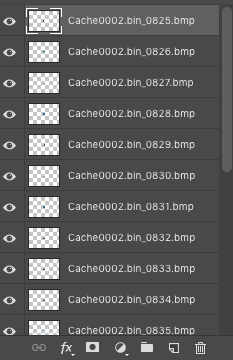

| Paste the layers into your original workspace, and rearrange them to rebuild the activity! |

I truly hope that this small tutorial helps with your process and workload should you find yourself rebuilding RDP session activity. For readers who do not currently own Photoshop, Adobe has a very inexpensive offering of the Adobe Creative Cloud (CC) for a personal license under the Photography plan, which is $9.99 a month. It is a great deal and one that I have used for my photography hobby for many years. And now on forensic analysis cases that involve RDP bitmap reconstruction!

Thursday, April 11, 2019

Live Response Collection - Cedarpelta

Hello again readers and welcome back!! Today I would like to announce the public release of updates to the Live Response Collection (LRC), which is named "Cedarpelta".

This may come as a surprise to some as Bambiraptor was released over two years ago, but over the past several months I've been working on adding more macOS support to the LRC. Part of the work that went into this version was a complete rewrite of all of the bash scripts that the LRC utilizes, which was no small task. Once the rewrite was completed, I focused on my never-ending goal of blending speed, comprehensive data collection, and internal logic to ensure that if something odd was encountered, the script would not endlessly hang or, even worse, collect data that was corrupted or not accurate. So, let's delve into some of the changes that Cedarpelta offers compared to Bambiraptor!

Windows Live Response Collection

To be honest, not a whole lot has changed on the Windows side. I added a new module at the request of a user, that collects Cisco AMP databases from endpoints, if the environment utilizes the FireAMP endpoint detection product. The primary reason for this is that the databases themselves contain a WEALTH of information, however users of the AMP console are limited to what they can see from the endpoints. The reason for this is likely because it would take a large amount of bandwidth and processing power to process every single item collected by the tool. Since most of this occurs within AWS, the processing costs would scale considerably, which in the end would end up costing more money to license and use.* (*Please note that I am not a FireAMP developer, and I do not know if this is definitely the case or not, but from my outsider perspective and experience in working with the product, this explanation is the most plausible. If any developers would like to provide a more detailed explanation, I will update this post accordingly!)

MacOS Live Response Collection

This is the section that has had, by far, the most work done to it. On top of the code rewrite, which makes the scripting more "proper" and also much, much faster, new logic was added to deal with things like system integrity protection (SIP) and files/folders that used to be accessible by default, but now are locked down by the operating system itself. Support has been added for:

- Unified Logs

- SSH log files

- Browser history files (Safari, Chrome, Tor, Brave, Opera)

- LSQuarantine events

- Even more console logs

- And many, many other items!

One of the downsides to the changes to macOS is the fact that things like SIP and operating system lock downs prevent a typical user from accessing data from certain locations. One example of this is Safari, where by default you cannot copy your own data out of the Safari directory because of the OS protections in place. There are ways around this, by disabling SIP and granting the Terminal application full disk access under Settings, but since the LRC was written to work with a system that is running with default configurations, it will attempt to access these protected files and folders, and if it cannot, it will record what it tried to do and simply move on. Some updates that are in the pipeline for newer versions of macOS may also require additional changes, but we will have to wait for those changes to occur first and then make the updates accordingly.
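To illustrate the "try it, record it, move on" logic described above, here is a small sketch. Please note that the LRC itself is written in bash; this Python version is only an illustration of the idea, and the function name `collect_files` and the log format are hypothetical, not taken from the LRC.

```python
import shutil
from pathlib import Path


def collect_files(sources, dest_dir):
    """Copy each source into dest_dir, logging failures instead of stopping."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    log = []
    for source in map(Path, sources):
        try:
            # copy2 also preserves timestamps where the platform allows it.
            shutil.copy2(source, dest / source.name)
            log.append((str(source), "copied"))
        except OSError as error:
            # Missing files and permission errors (for example, a SIP-protected
            # location) are recorded, and the collection simply continues.
            log.append((str(source), f"skipped: {error.strerror or error}"))
    return log
```

The important design choice is that a failure on one item is recorded and never aborts the rest of the collection.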

You will most likely no longer be able to perform a memory dump or automate the creation of a disk image on newer versions of macOS with the default settings, because of the updates and security protections native within the OS internals. As I have stated in the past, if you absolutely require these items I highly recommend a solution such as Macquisition from BlackBag. The purpose of the LRC is, and will always be, to collect data from a wide range of operating systems in an easy fashion, and require little, if any, user input. It does not matter if you are an experienced incident response professional, or directed to collect data from your own system by another individual, you simply run the tool, and it collects the data.

Future Live Response Collection development plans

As always, the goal of the Live Response Collection is not only to collect data for an investigation, it is also able to be customized by any user to collect information and/or data that is desired by that user. Please consider taking the time to develop modules that extract data and share modules that you have already developed. The next update of the LRC will focus on newer versions of Windows (Windows 10, Server 2019, etc). I personally am still encountering very few of those systems in the wild, but that is mostly because I tend to deal with larger enterprises where adoption of a new operating system takes considerable time, compared to a typical user that runs down to Best Buy and has a new Windows 10 laptop because the computer they used for a few years no longer works.

Remember, a tool is a tool. It is never the final solution

One last note: please remember that while a lot of work has been put into the LRC to make it "just work", at the end of the day it is just a tool that is meant to enhance the data collection process. There are many open source tools that are available to collect data, perhaps more than ever before, and one tool may work where another one failed.

For example, you might try the CrowdStrike Mac tool and it might work where the LRC fails, or vice versa. Or you may try to use Eric Zimmerman's KAPE on a Windows machine, but it fails because the .NET Framework was not installed. Or you might try to use the LRC on a system running Cylance Protect and it gets blocked because of the "process spawning process" rule.

In each case you have to give various tools and methods a shot, with the end goal of collecting the information that you want. It is important to remember that YOU (the user of the tool) are the most valuable aspect of the data collection process, and you simply utilize tools to make the collection process faster and smoother!

LiveResponseCollection-Cedarpelta.zip - download here

MD5: 7bc32091c1e7d773162fbdc9455f6432

SHA256: 2c32984adf2b5b584761f61bd58b61dfc0c62b27b117be40617fa260596d9c63

Updated: September 5, 2019

Tuesday, November 27, 2018

Skype Hype/Gripe

Hello again readers and welcome back! Based off the title of this blog post, I am pretty sure that you already know that we will be covering Skype in this post. As with any good story, it is best to start at the beginning of this magical journey....

Chapter 1: Data generation

As some of you may know, I have been involved with the Cyber Sleuth Science Lab since this summer, working on bringing STEM (specifically digital forensics) to high school aged students. This project requires a tremendous amount of behind the scenes work, especially in the scenario data generation realm. For the next phase of the project, we decided that utilizing Skype would be the best chat application to use, because not only could we generate data across several platforms, but we can also extract it in a reliable method from mobile devices. So, I created some Skype conversations on my test device (a Samsung Galaxy S6 Edge, specifically SM-G925F (remember the model number, you will see it again)), made a full image of it, and loaded it into Magnet AXIOM, which the folks at Magnet Forensics have generously made available to the Cyber Sleuth participants. And, surprisingly, I was met with this:

| Processing in AXIOM found the "main.db" file, but nothing else |

Obviously I knew something wasn't right, as I definitely populated data in the application, which I could easily see. But it was not detected in AXIOM, so I had to dig into this a bit more.

Chapter 2: A sorta "new" discovery. Kinda-ish

Obviously one of the main aspects in our career field is that we trust tools to extract known data from known places, but at any time that can change and we have to update our methods (and tools) accordingly. I told Jessica about a possible new discovery when I found this at the beginning of November, which I had to put aside for a few weeks as I took part in an incident response case. This week I was able to jump back into it again, and worked on trying to figure out exactly "what" was happening here. Initially, the thought was that Skype had changed a whole bunch of stuff. As it turns out (thankfully) that is not quite the case, but it does bring up a couple of issues to keep in mind if you are looking at mobile devices with Skype usage.

The first item to note is the presence of the file "main.db". Historically this was located under the "databases" folder, but now it is located under the "files" folder, specifically under the subfolder "live#<username>".

| The main.db file, historically, was not in this location |

| Contents of "main.db". Notice the lack of data in the tables |
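A quick way to confirm that a database like "main.db" really is empty, rather than simply unparsed by a tool, is to count the rows in every table. This is a minimal sketch using Python's built-in sqlite3 module; the function name `table_row_counts` is my own, and you should always run it against a working copy of the evidence file.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def table_row_counts(db_path):
    """Return {table_name: row_count} for every table in a SQLite database."""
    # Open read-only. as_uri() percent-encodes characters such as "#",
    # which appears in the "live#<username>" folder name.
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        names = [row[0] for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
        counts = {}
        for name in names:
            # Quote the identifier in case a table name contains odd characters.
            quoted = '"' + name.replace('"', '""') + '"'
            counts[name] = conn.execute(
                f"SELECT COUNT(*) FROM {quoted}").fetchone()[0]
        return counts
```

A result where every count is zero points you toward looking elsewhere for the data, which is exactly what happened here.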

There is a "databases" folder though, so naturally the next step was to look there. Sure enough, this contained what looks like the database(s) we are looking for

| The "new" contents of the "databases" folder |

Chapter 3: Parsing the data

Now that I had identified my database, it was a question of figuring out what table was going to contain what I was looking for. Fortunately most of the tables follow an easy to recognize naming convention, so I focused my efforts on the "chatItem" table

| The table "chatItem" from the user database |

I need to do some more testing on what the flags actually mean, but for now this particular SQLite query should work quite well if you also come across it (please keep reading though, as there are caveats on WHEN to use this query)

SELECT DATETIME(chatItem.time / 1000, 'unixepoch') as "Date/Time (UTC)", person_id as Sender, content as Message, CASE type WHEN 9 THEN 'Received' WHEN 10 THEN 'Sent' WHEN 12 THEN 'Multimedia Sent' WHEN 1 THEN 'Unknown' END as "Type", status as "Status", CASE deleted WHEN 1 THEN 'Yes' WHEN 0 THEN '' END as "Deleted", edited as "Edited", retry as "Retry", file_name as "Multimedia File Name", device_gallery_path as "Multimedia Path On Device" FROM chatItem

Once you input the above SQLite query, you can end up with a nicely formatted output which is easy to look at, like this (the output was pasted from SQLite Spy to a tsv file, then opened and formatted in Excel)

| Cleaned up Skype chats |
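If you would rather script the export than paste results out of a GUI tool, the same query can be run with Python's built-in sqlite3 module and written straight to a tsv file. This is a sketch under a few assumptions: the function name `export_chats` is hypothetical, the string values are written as single-quoted SQL literals, and I added an ORDER BY on the time column, which is not part of the query above.

```python
import csv
import sqlite3
from contextlib import closing

CHAT_QUERY = """
SELECT
    DATETIME(chatItem.time / 1000, 'unixepoch') AS "Date/Time (UTC)",
    person_id AS "Sender",
    content AS "Message",
    CASE type
        WHEN 9 THEN 'Received'
        WHEN 10 THEN 'Sent'
        WHEN 12 THEN 'Multimedia Sent'
        WHEN 1 THEN 'Unknown'
    END AS "Type",
    status AS "Status",
    CASE deleted WHEN 1 THEN 'Yes' WHEN 0 THEN '' END AS "Deleted",
    edited AS "Edited",
    retry AS "Retry",
    file_name AS "Multimedia File Name",
    device_gallery_path AS "Multimedia Path On Device"
FROM chatItem
ORDER BY chatItem.time  -- assumption: chronological order, not in the original query
"""


def export_chats(db_path, tsv_path):
    """Run the chatItem query and write the results to a tab-separated file."""
    with closing(sqlite3.connect(db_path)) as conn:
        cursor = conn.execute(CHAT_QUERY)
        headers = [column[0] for column in cursor.description]
        rows = cursor.fetchall()
    with open(tsv_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle, delimiter="\t")
        writer.writerow(headers)
        writer.writerows(rows)
    return len(rows)
```

The resulting tsv file opens directly in Excel, the same as the pasted output described above.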

Chapter 4: What the heck is going on here??

After much discussion on exactly "what" was happening here with Jessica, it turns out that it is actually a couple of things that all combined to have the data stored like this.

First of all, remember the model number that I listed? Well, this is the Global version of the Galaxy S6 Edge. That means that some apps are pre-bundled, and in this case Microsoft apps (including Skype) were included by default. After I updated the app to what looked like the latest version on the Play Store, I did my data pull (I chose to do this, rather than pull from APK Mirror, because I wanted to see if the latest app version was supported with my tools). However, one important caveat to note, is that this device is running Android 5.1.1 (because it is super easy to root an older version of Android and get a full image of the device, which is what is needed for the data analysis portion of the Cyber Sleuth workshop). Android 5 currently accounts for almost 18% of all Android devices on the market (kinda surprising, I know).

| Android market saturation as of October 26, 2018, retrieved on November 27, 2018 from https://developer.android.com/about/dashboards/ |

The issue here is that although according to the Google Play Store I was updating to the latest version of Skype, in reality because the "new" versions of Skype are not compatible with older Android versions (by default the SM-G925F (told you that you would see it again) ships with Android 5), it was actually installing "Skype Lite". Even though, as you can clearly see from the screenshots, the Google Play Store was telling me that "Skype" was indeed installed, and "Skype Lite" was not.

| "Skype" application information from the S6 Edge |

| According to this, Skype is installed |

| This screen suggests that "Skype Lite" is a different application |

| This confirms that, according to the Play Store, "Skype Lite" is indeed a different application on my Galaxy S6 Edge running Android 5.1.1 |

Chapter 5: Whew. No changes. But support is needed

As you may have guessed, Skype Lite actually stores data in a different fashion than traditional Skype itself. Most of the tools on the market today are set to handle Skype data, but not Skype Lite. This is the reason that AXIOM did not detect Skype data: it does not (yet) have support for Skype Lite, it only has support for Skype itself. And although it was initially suspected, Skype itself did not undergo a drastic change. It was just a combination of things that resulted in Android/Google Play/Skype doing something that was totally unexpected, because of the base installation of Android that was running.

NOTE: If I was running Android 6 or later on the device, the aforementioned tools should parse the data, but we will have to hold onto that thought for testing for another day :)

Chapter 6: The Grand Finale

If you made it all the way down here, congratulations for sticking with this adventure. It has definitely been a fun one!

Always remember that, at the end of the day, tools are just tools, and they have limitations and shortcomings. In a perfect world every tool could handle all the data from every application from every device. But we all know that is not going to happen. Don't be afraid to dig into the data itself, because you might find that an entire data structure is not being parsed properly. Or that the formats have changed. Or, you may find through a series of events that your device is running a different application, with a different storage structure, than what the device is "telling" you is really running!

Wednesday, August 8, 2018

Live Response Collection Development Roadmap for 2018

Hello again readers and welcome back! It's been a little while ...OK, a long while... since I've made updates to the Live Response Collection. Rest assured for those of you who have used, and continue to use it, that I am still working on it, and trying to keep it as updated as possible. For the most part it has far exceeded my expectations and I have heard so much great feedback about how much easier it made data collections that users and/or businesses were tasked with. The next version of the LRC will be called Cedarpelta, and I am hoping for the release to take place by the end of this year.

As most Mac users have likely experienced by now, not only has Apple implemented macOS, they have also changed the file system to APFS, from HFS+. Because the Live Response Collection interacts with the live file system, this really does not affect the data collection aspects of the LRC. Although it DOES affect third party programs running on a Mac, as detailed in my previous blog post.

Although the new operating system updates limit what we can collect leveraging third party tools, there are a plethora of new artifacts and data locations of interest, and to ensure the LRC is collecting data points of particular interest, I've been working with the most knowledgeable Apple expert that I (and probably a large majority of readers) know, Sarah Edwards (@iamevltwin and/or mac4n6.com). As a result of this collaboration, one of the primary features of the next release of the LRC will be much more comprehensive collections from a Mac!

For the vast majority of you who use the Windows version of the Live Response Collection, don't fret, because there will be updates in Cedarpelta for Windows as well! These will primarily focus on Windows 10 files of interest, but also will include some additional functionality for some of the existing third-party tools that it leverages, like autoruns.

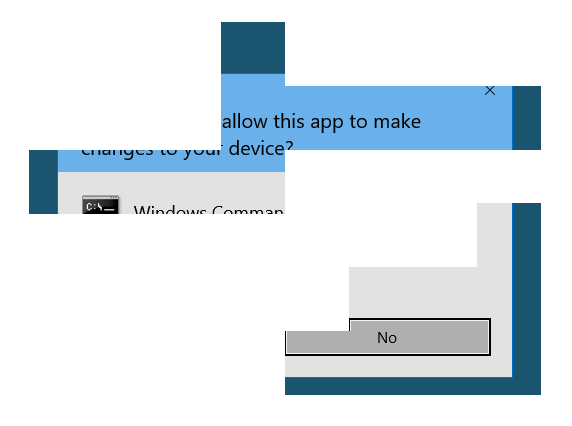

| Autoruns 13.90 caused an issue, and was fixed very quickly once the issue was reported (thanks to @KyleHanslovan) |

As always, if there are any additional features that you would like to see the LRC perform, please reach out to me, through Twitter (@brimorlabs or @brianjmoran) or the contact form on my website (https://www.brimorlabs.com/contact/) or even by leaving a comment on the blog. I will do my best to implement them, but remember, the LRC was developed in a way that allows users to create their own data processing modules, so if you have developed a module that you regularly use, and you would like to (and have the authority to) share it, please do, as it will undoubtedly help other members of the community as well!

Tuesday, July 17, 2018

Let's Talk About Kext

Hello again readers and welcome back! Today's blog post is going to cover some of the interesting things I found poking around MacOS while developing updates to the Live Response Collection. First off, I have to offer my thanks to Sarah Edwards for taking the time to talk about what she has done with regards to the quirkiness ("official technical term") regarding MacOS, System Integrity Protection ("SIP"), kernel extensions, and everything else that completely derailed my plans for pulling data from a Mac!

Our story begins by trying to diagnose some errors that I noticed while trying to perform a memory dump on my system using osxpmem. The errors were related to my system loading the kernel extension MacPmem.kext, which resulted in the error message "/Users/brimorlabs/Desktop/Cedarpelta-DEV/OSX_Live_Response/Tools/osxpmem_2.1/temp/osxpmem.app/MacPmem.kext failed to load - (libkern/kext) system policy prevents loading; check the system/kernel logs for errors or try kextutil(8).". Even though I was running the script as root, for some reason the kext was failing to load.

| That weird error message is weird |

The Live Response Collection script has always changed the owner of the kernel extension, so I knew that ownership was also not the problem, so that left me in a bit of a bind. Fortunately the tool "kextutil" is included on a standard Mac load, so I hoped that running that command could shed some light on my issues. The results from running kextutil were mostly underwhelming, with the exception of .... what the heck is that path?

| Output from kextutil. What is "/Library/StagedExtensions/Users/brimorlabs/Desktop/Cedarpelta-DEV/OSX_Live_Response/Tools/osxpmem_2.1/osxpmem.app/MacPmem.kext" and why are you there, and not the folder you are supposed to be in? |

The actual path on disk was "Users/brimorlabs/Desktop/Cedarpelta-DEV/OSX_Live_Response/Tools/osxpmem_2.1/osxpmem.app/MacPmem.kext", but for some reason the operating system was putting it in another spot. OK, that seems really weird, so why is my system doing stuff that I don't specifically want it to do? Oh Apple, how very Python of you! :)

| osxpmem/MacPmem.kext related files under the "/Library/StagedExtensions" path |
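Based purely on the single kextutil output shown above, the staged copy appears to live at the original absolute path with "/Library/StagedExtensions" placed in front of it. The sketch below just encodes that one observation so you know where to look; the function name `staged_path_for` is hypothetical, and I have not confirmed that macOS always follows this layout.

```python
from pathlib import PurePosixPath

# Staging folder observed in the kextutil output above.
STAGING_ROOT = PurePosixPath("/Library/StagedExtensions")


def staged_path_for(kext_path):
    """Guess where a kext would be staged, based on the observed path pattern."""
    kext = PurePosixPath(kext_path)
    if not kext.is_absolute():
        raise ValueError("expected an absolute path to the .kext bundle")
    # Drop the leading "/" and re-root the remaining parts under the staging folder.
    return STAGING_ROOT.joinpath(*kext.parts[1:])
```

Checking whether that predicted path exists on disk is a quick hint that the kernel extension was staged rather than loaded from its original folder.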

As it turns out, thanks to quite a bit of research, the "Library/StagedExtensions" folder is, in very basic terms, the sandbox in which MacOS puts things that it does not trust, as a function of SIP. Now, if you were presented with the "Do you trust this extension" prompt ...

| This is what the "System Extension Blocked" popup looks like. This is NOT the popup you see with osxpmem |

... then, if you navigate to "Security & Privacy", click on the "General" tab, and click "Allow"

| Security & Privacy - "General". Note the "System software from developer REDACTED was blocked from loading" and the "Allow" button |

It would (ok, *should*) then stop the symbolic linking (that is what I assume is happening, although that is not confirmed yet) from the original folder to the StagedExtensions folder to allow the sandboxing/SIP to occur. That means that the kernel extension would then be able to run, and the world would be a glorious place. Except.....it seems that once a developer/company signs their kext, which allows the bypassing of SIP, that means that EVERYTHING signed by them in the future will also, automatically, be trusted. Obviously this could present a security issue down the road if those signing certificates were stolen. I don't know of that happening yet, but it does seem plausible and could presumably happen in the future.

I've tried a couple of workarounds to bypass the SIP process to allow me to dump memory from a system without having to go through all of the bypass sip/csrutil steps (if you are unfamiliar with that, please follow the link here). None of my attempts have succeeded yet, but I am still trying. I specifically do NOT want to reboot the computer, because I want to collect memory from the system and not potentially lose volatile data. I will either update this post, or continue this as a series, when I find a sufficient workaround (if there is one) to this issue! With that being said, if you found a way to dump memory from a MacOS live system that has SIP enabled, and if you are able to share it publicly (or privately) please share your methodology. I would love for the next LRC update to be able to include memory dumps from systems with SIP enabled!

Sunday, June 17, 2018

Who's Down With PTP?

Our journey begins with using Magnet Axiom (thanks Jessica!) in an attempt to acquire data, and subsequently process that data, from a stock Android device. Following the very concise, user-friendly prompts, all of the steps were properly taken in an effort to acquire the device. However, the first issue arose when the "Trust this computer" prompt never came up on the Aristo itself. Since I've had many experiences with mobile devices in the past, my first thought was to fire up Android Debug Bridge (adb) in an attempt to make sure that adb was properly recognizing the device. Because if adb can't recognize it, acquisition through just about any commercial tool just won't work. Interestingly, choosing the "Charging only" option from the USB option in Developer mode, which is usually the standard in Android device acquisition, results in nothing being recognized in adb.

| Charging, the usual method, does not work |

| No devices shown in adb |
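Checking whether adb can see the device is easy to script if you are testing several USB modes in a row. Here is a rough sketch that runs `adb devices` and parses the output; the function names are my own, and it assumes that adb is installed and on your PATH.

```python
import subprocess


def parse_adb_devices(output):
    """Turn the text printed by `adb devices` into {serial: state}."""
    devices = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the header, and "* daemon ..." status messages.
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        parts = line.split()
        if len(parts) >= 2:
            # The state is typically "device", "unauthorized", or "offline".
            devices[parts[0]] = parts[1]
    return devices


def connected_devices(adb="adb"):
    """Run `adb devices` and return the parsed result."""
    result = subprocess.run(
        [adb, "devices"], capture_output=True, text=True, check=True)
    return parse_adb_devices(result.stdout)
```

An empty dictionary is the scripted equivalent of the screenshot above, where nothing is listed. A state of "unauthorized" means the debugging prompt on the device has not been accepted yet.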

So, the next step is to see whether connecting with MTP (Media Transfer Protocol) will allow adb to recognize the device. It is a different protocol, and I know from past experience that sometimes a different protocol means the difference between working or not. When I chose MTP from the Developer Options, I was *finally* presented with the desired "Allow USB debugging" prompt, which also lists the unique computer fingerprint. So...success!!

| MTP is picked as the USB connection on the device |

| Finally, debugging options show up on the device with MTP! |

Or not. adb recognizes the device and allows me to send commands, such as making a backup, but what I need is the Magnet agent to be pushed to the device so we can get the user data, such as SMS, contacts, call history, etc. When connected via MTP, it seems the Aristo allows some data to be transferred from the device to a system, but it does not allow data to go from the system to the mobile device. Curses!! Foiled again!!

| adb recognizes the device with MTP. Partial success! |

After deliberating, some adb-kung-fu, and using Google to search for additional options, I decided to try using PTP (Picture Transfer Protocol). adb still recognized the device, however, for reasons that COMPLETELY elude me, setting it up this way allowed not only the backup to be performed, but ALSO allowed data (aka the Magnet agent) to be pushed to the device! At last, I finally had success!

| Now we choose the PTP option. For some reason, this choice works!! |

| In Axiom, we choose the ADB (Unlocked) method |

| Finally! With the PTP connection, AXIOM recognizes the device! |

| Ready to start processing! |

| Data acquisition has begun at last! |

| Our data has been acquired! |

Interestingly enough, however, when I completely cleared the Trusted Devices on the mobile device, I could not get the "Trust Connections from this device" prompt to show up using a PTP connection. So, as long as you follow the method below, you *may* be able to get data from a severely locked down mobile device!

0) Get familiar with using adb from the command line.

It is a free download, and most commercial tools use adb behind the scenes. If you do any work with Android devices, you should know some basics of adb!

1) Connect the device using the MTP protocol.

2) When presented with the Trust Connections prompt on the device, choose OK and make sure the "Always allow from this computer" box is checked

3) Change the connection protocol to PTP

4) Acquire the device using Magnet Axiom

5) ....

6) PROFIT!!

One additional note I would like to add about the Magnet agent when using Magnet Axiom to acquire data from a device. In my opinion, it is very important to choose the "Remove agent from device upon completion" option, found under Settings, when acquiring data from a mobile device. We ran into this issue with the agent being left behind when processing mobile devices during forward deployments. When we had devices associated with high value entities, the final step in data acquisition was that we would have to interact with the device and manually remove the agent and acquisition log(s). (NOTE: it was not Magnet, as they did not exist at the time, it was another vendor who I will not publicly name.) It is entirely up to the end user if they feel comfortable leaving behind an agent or not. I definitely do not and will always choose to remove it. I just wanted to specifically point that out to anyone using Axiom to get data from mobile devices!

| To change the agent settings, open Process, navigate to Tools, then Settings |

| Check the "Restore Device State" box to remove the Magnet agent after acquisition |
